#API Documentation Made Simple with Ai

API Documentation Made Simple with AI

Simplify API documentation with AI-powered tools. Create clear, accurate, and developer-friendly docs faster than ever.

Top Tools and Technologies to Use in a Hackathon for Faster, Smarter Development

Participating in a hackathon like those organized by Hack4Purpose demands speed, creativity, and technical prowess. With limited time to build a working prototype, the right tools and technologies can give your team a significant edge.

Here’s a rundown of some of the best tools and technologies to help you hack efficiently and effectively.

1. Code Editors and IDEs

Fast coding starts with a powerful code editor or Integrated Development Environment (IDE).

Popular choices include:

Visual Studio Code: Lightweight, extensible, supports many languages

JetBrains IntelliJ IDEA / PyCharm: Great for Java, Python, and more

Sublime Text: Fast and minimalistic with essential features

Choose what suits your language and style.

2. Version Control Systems

Collaborate smoothly using version control tools like:

Git: The most widely used system

GitHub / GitLab / Bitbucket: Platforms to host your repositories, manage issues, and review code

Regular commits and branch management help avoid conflicts.

3. Cloud Platforms and APIs

Leverage cloud services for backend, databases, or hosting without setup hassle:

AWS / Azure / Google Cloud: Often provide free credits during hackathons

Firebase: Real-time database and authentication made easy

Heroku: Simple app deployment platform

Explore public APIs to add extra features like maps, payment gateways, or AI capabilities.

4. Frontend Frameworks and Libraries

Speed up UI development with popular frameworks:

React / Vue.js / Angular: For dynamic, responsive web apps

Bootstrap / Tailwind CSS: Ready-to-use styling frameworks

These tools help build polished interfaces quickly.

5. Mobile App Development Tools

If building mobile apps, consider:

Flutter: Cross-platform, single codebase for iOS and Android

React Native: Popular JavaScript framework for mobile

Android Studio / Xcode: Native development environments

6. Collaboration and Communication Tools

Keep your team synchronized with:

Slack / Discord: Instant messaging and voice/video calls

Trello / Asana: Task and project management boards

Google Docs / Notion: Real-time document collaboration

Effective communication is key under time pressure.

7. Design and Prototyping Tools

Create UI/UX mockups and wireframes using:

Figma: Collaborative design tool with real-time editing

Adobe XD: Comprehensive UI/UX design software

Canva: Simple graphic design tool for quick visuals

Good design impresses judges and users alike.

8. Automation and Deployment

Save time with automation tools:

GitHub Actions / CircleCI: Automate builds and tests

Docker: Containerize applications for consistent environments

Quick deployment lets you demo your project confidently.

Final Thoughts

Selecting the right tools and technologies is crucial for success at a hackathon. The perfect mix depends on your project goals, team skills, and the hackathon theme.

If you’re ready to put these tools into practice, check out upcoming hackathons at Hack4Purpose and start building your dream project!

How to Launch a Hyperlocal Delivery App in 2025

Let’s face it: in today’s world, convenience isn’t a luxury—it’s an expectation. Whether it’s a forgotten charger, a midnight snack, or a pack of medicines, people want things delivered not just fast, but hyper fast. That’s where hyperlocal delivery comes in.

If you’ve ever used apps like Dunzo, Swiggy Genie, Zepto, or even Porter, you already know what this model looks like. It’s all about delivering essentials from nearby vendors to people in the same locality, usually within 30–45 minutes. In cities like Bengaluru, Delhi, and Mumbai, this model is not just thriving—it’s essential.

But here’s the kicker: there’s still space for new players.

Why Hyperlocal Still Has Room to Grow

You might wonder—if the big names are already out there, what’s the point of building another app?

The answer: focus and flexibility.

Most existing players serve multiple use-cases (groceries, documents, food, errands, etc.) in densely populated urban hubs. But what about tier-2 cities? What about niche markets like Ayurvedic products, pet supplies, or same-day rental pickups? There are countless hyperlocal niches that are still underserved—and that's where your opportunity lies.

Add to that the increasing digital literacy, mobile-first behavior, and a growing culture of solo living, and you’ve got a perfect storm for growth.

The Building Blocks of a Great Hyperlocal App

If you’re considering launching your own on-demand delivery service, here are the absolute must-haves:

1. Customer App

This is where users browse products, place orders, track deliveries, and make payments. Smooth navigation, real-time tracking, and quick reordering options are key.

2. Vendor App

Merchants or local sellers need their own interface to manage orders, mark availability, and update inventory. A simple dashboard can go a long way in keeping small businesses engaged.

3. Delivery Partner App

For the people making the actual deliveries, think route optimization, one-tap order acceptance, and a GPS-powered navigation feature.

4. Admin Panel

The command center where you can monitor all activities—users, vendors, transactions, support tickets, commissions, and performance analytics.

What Tech Stack Should You Use?

For those less tech-savvy, here’s a quick translation: the tech stack is the set of tools, programming languages, and platforms used to build your app.

For hyperlocal delivery apps, some popular choices are:

React Native or Flutter for mobile app development (iOS and Android with one codebase)

Node.js or Laravel for backend development

MongoDB or PostgreSQL for managing the database

Google Maps API for route tracking and delivery mapping

Razorpay or Stripe for secure, multi-mode payments

Now, if that sounds like a lot—don't worry. You don’t need to build everything from scratch.

Clone Solutions: A Smart Starting Point

One of the fastest ways to enter the hyperlocal market is by launching with a ready-made, customizable base solution. For example, if you’re inspired by Dunzo’s business model, you can get started with a Dunzo clone that mirrors the core functionality—on-demand pickup and drop services, real-time tracking, multiple delivery categories, and user-friendly UI.

This saves you time and money, and lets you focus on local partnerships, marketing, and growth.

Challenges You’ll Face (And How to Solve Them)

Every business has its roadblocks. Here are a few you should prepare for:

Vendor acquisition: Offer early adopters better visibility or commission-free orders for the first few months.

Delivery partner shortages: Use flexible shift-based hiring models and incentivize performance.

Last-mile delays: Incorporate AI-based route optimization and hyperlocal clustering to reduce waiting times.

User trust: Build features like OTP-based delivery, live support chat, and transparent cancellation/refund policies.
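To make the route-optimization idea above concrete, here is a minimal sketch. It is not the AI-based approach the list mentions but a plain greedy nearest-neighbor heuristic, and it assumes each stop is a (latitude, longitude) pair in degrees. The function names `haversine_km` and `greedy_route` are hypothetical, chosen only for illustration.

```python
import math

def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius

def greedy_route(start, stops):
    """Order stops by repeatedly visiting the nearest unvisited one.

    This is a simple heuristic, not an optimal route solver.
    """
    remaining = list(stops)
    route = []
    current = start
    while remaining:
        nearest = min(remaining, key=lambda s: haversine_km(current, s))
        remaining.remove(nearest)
        route.append(nearest)
        current = nearest
    return route

# Hypothetical rider position and drop-off points.
rider = (12.97, 77.59)
drops = [(12.99, 77.59), (12.98, 77.59), (13.00, 77.59)]
ordered = greedy_route(rider, drops)
```

A production system would likely use road distances from a mapping service rather than straight-line distance, and would batch orders into clusters before routing each one.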

Remember—execution matters more than ideas.

Monetization Models That Work

Hyperlocal apps can make money in several ways:

Delivery charges: You can offer tiered pricing based on urgency or distance.

Commission from vendors: Take a percentage of each completed order.

Subscription plans: Let users subscribe to premium features like faster delivery or no delivery fees.

Ads and promotions: Offer vendors the ability to promote themselves inside the app.

If you design your pricing strategy right, you’ll generate consistent revenue without overwhelming users.
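As a rough illustration of tiered delivery charges based on distance and urgency, the sketch below computes a fee from a small tier table. Every number in it (tier limits, fees, the urgency surcharge) is made up for the example, and `delivery_fee` is a hypothetical name, not part of any real platform.

```python
import math

def delivery_fee(distance_km, urgent=False):
    """Return a delivery fee in currency units using illustrative distance tiers."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    # (upper bound in km, flat fee) pairs; all values are assumptions.
    tiers = [(2, 20), (5, 35), (10, 60)]
    for limit_km, fee in tiers:
        if distance_km <= limit_km:
            base = fee
            break
    else:
        # Beyond the last tier: top-tier fee plus 8 per started extra kilometre.
        base = 60 + 8 * math.ceil(distance_km - 10)
    # Urgent orders pay a flat surcharge on top of the distance fee.
    return base + 25 if urgent else base

short_trip = delivery_fee(1.5)
urgent_trip = delivery_fee(4, urgent=True)
long_trip = delivery_fee(12.5)
```

Keeping the tiers in a single table makes it easy to tune pricing per city without touching the calculation itself.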

Why Choose Miracuves for Your App Development?

If you’re serious about launching a delivery app but don’t want to get lost in code, coordination, and complexity—Miracuves is a solid partner to consider.

With years of experience building white-label and custom app solutions for delivery-based businesses, Miracuves brings a mix of technical expertise, domain insight, and speed-to-market development. Whether you want a full-stack solution or just a base app to tweak and launch, they’ve got you covered.

They also offer scalable clones for popular models like Dunzo, UberEats, and GoPuff—so you can hit the ground running with something proven, yet flexible enough to make your own.

Final Thoughts

The hyperlocal delivery space in 2025 is packed with potential. As lifestyles get busier and expectations get faster, the demand for quick, reliable, and neighborhood-focused delivery will only grow.

If you’re thinking of launching something in this space, the time is now. Start with a niche. Choose the right tech partner. And build something that truly serves people close to home.

Because sometimes, the best business ideas are the ones that deliver—literally.

Things No One Tells You About Building a Crypto Exchange

Building a crypto exchange doesn’t have to be hard anymore. Why? Let me share my experience.

When I started building a crypto exchange, I thought the most difficult part would be the technology. Matching engines, charting systems, and crypto wallets are essential parts of a crypto exchange, and each one takes a lot of time and effort to build properly.

And yes, they take time, planning, and sharp execution.

But once the initial build was in progress, it became clear that the real work wasn’t just about writing code. It was about making decisions that balanced trust, performance, and user experience all at once. And that’s when the real work began.

If you're curious about what it takes to build a real crypto exchange, here’s what I’ve learned from actually doing it. No theory. Just honest lessons.

Building a Crypto Exchange: What You Need to Know

When I started working on a crypto exchange, I learned it’s not just about building something. It’s about thinking clearly, staying organized, and understanding what people really need. Here’s what I found out.

The Engine Is Just the Beginning

I first thought that building the trading engine was going to be the hardest part. Sure, it’s crucial to have an engine that can handle trades quickly and accurately. But once that was in place, it became clear that the rest of the platform was just as important, if not more.

Developing the order book, integrating trading pairs, and building a stable API for users and third-party tools are key steps. These parts help the platform operate in real time and ensure other services connect smoothly.
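To show what an order book and matching engine do at the smallest scale, here is a hedged sketch of price-time priority matching for limit orders. It is an illustration only, not the engine described in this post: the `OrderBook` class and its `submit` method are hypothetical, prices are integers (for example, cents), and there is no cancellation, persistence, or fee handling.

```python
import heapq
from itertools import count

class OrderBook:
    """Tiny limit order book with price-time priority."""

    def __init__(self):
        self._bids = []       # heap keyed on negated price, so the highest bid is first
        self._asks = []       # heap keyed on price, so the lowest ask is first
        self._seq = count()   # arrival counter used as the time-priority tie-breaker

    def submit(self, side, price, qty):
        """Match an incoming limit order; return a list of (price, qty) fills."""
        if side == "buy":
            book, crosses = self._asks, lambda best: best <= price
        elif side == "sell":
            book, crosses = self._bids, lambda best: best >= price
        else:
            raise ValueError("side must be 'buy' or 'sell'")
        fills = []
        while qty > 0 and book:
            key, seq, rest_price, rest_qty = book[0]
            if not crosses(rest_price):
                break
            traded = min(qty, rest_qty)
            fills.append((rest_price, traded))  # trade at the resting order's price
            qty -= traded
            if traded == rest_qty:
                heapq.heappop(book)
            else:
                # Same key and sequence number, so the heap order is unchanged.
                book[0] = (key, seq, rest_price, rest_qty - traded)
        if qty > 0:
            # Whatever is left rests on the book on the order's own side.
            own = self._bids if side == "buy" else self._asks
            key = -price if side == "buy" else price
            heapq.heappush(own, (key, next(self._seq), price, qty))
        return fills

book = OrderBook()
book.submit("sell", 101, 5)
book.submit("sell", 100, 3)
fills = book.submit("buy", 101, 6)
```

In this example the buy order takes the cheaper ask first and then part of the next one, which is the behavior a real engine must reproduce at far higher throughput.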

From the very start, I realized that building a platform people can depend on involves much more than technical development. It requires thoughtful design, proper structure, and a clear understanding of what users need.

Regulation Process

Most people think of regulations as something that holds them back. But for me, they ended up being a guide. Learning about the rules early on helped steer the development of the platform.

We focused on proper KYC and AML processes right from the beginning.

These checks are important not just for staying compliant, but for keeping users safe and managing risk. Users today expect to upload documents and verify their identity, and the system needs to handle that efficiently.

As I learned more, it became clear that understanding these rules early made building the platform easier. If you want to create an exchange, make sure you familiarize yourself with the regulations in the regions where you plan to operate.

Security Comes from More Than Just Technology

A lot of people think security is just about using the right tools or setting up cold crypto wallets. While those are important, I learned that real security starts behind the scenes. We implemented smart blockchain monitoring tools and made sure the entire team followed strict access controls.

These measures may not be visible to users, but they make a huge difference in how secure the system feels and operates. At every level, the team must focus on keeping everything secure, from how the code is written to how support tickets are handled.

Clear Design Helps Build Loyalty

It’s easy to focus on adding features or making the platform overly complex. But when it comes down to it, users want something simple and clear. They want to be able to log in, trade, and finish quickly.

We also used basic AI tools to guide users through some steps and help identify common errors or flag patterns. This gave us more insight into where people got stuck and allowed us to improve faster.

A clean, easy-to-navigate design keeps people coming back, while confusion can make them leave.

What Surprised Me the Most

When it came time to hand over the project, I decided to trust Hashcodex, a crypto exchange development company, to take it further. I gave them the entire project to develop from scratch.

From the beginning, they took full responsibility for the project. I shared the plan with them, and they improved it in ways I hadn’t thought of. They brought in their own expertise, asked relevant questions, and handled everything from fiat integration to dashboard flows.

What really impressed me was how well they understood the direction I wanted for the project. They didn’t just meet the requirements, they made the entire project better. The team at Hashcodex is excellent. If you're looking for a company to help build or improve your platform, I highly recommend them. They truly understand the work and are committed to delivering quality.

Conclusion

Building a crypto exchange is challenging, but it’s definitely possible if you take the right steps. It’s not just about technology; it’s about creating something people trust and enjoy using.

If you’re serious about launching your own platform or improving an existing one, I suggest you work with a team that knows crypto exchange development and cares about the details. Hashcodex is a great choice for anyone looking for a trusted partner in this field.

What Is AI Copilot Development and How Can It Revolutionize Your Business Operations?

Artificial Intelligence (AI) is no longer a futuristic concept—it's a present-day business asset. Among the most transformative innovations in this space is the rise of AI Copilots. These intelligent, task-oriented assistants are rapidly becoming indispensable in modern workplaces. But what exactly is AI Copilot development, and why should your business care?

In this blog, we’ll explore what AI Copilot development entails and how it can dramatically streamline operations, increase productivity, and drive strategic growth across your organization.

What Is an AI Copilot?

An AI Copilot is a specialized AI assistant designed to work alongside humans to perform specific tasks, offer contextual support, and automate complex workflows. Unlike general chatbots, AI Copilots are tailored for deeper integration into business systems and processes. Think of them as highly intelligent digital coworkers that can analyze data, suggest decisions, and execute actions in real time.

Some popular examples include:

GitHub Copilot for software development

Microsoft 365 Copilot for productivity tools

Salesforce Einstein Copilot for CRM tasks

These solutions are context-aware, learn from usage patterns, and adapt over time—making them much more than simple bots.

What Is AI Copilot Development?

AI Copilot development is the process of designing, building, and deploying AI-powered assistants that are customized to meet the unique needs of your business. It involves integrating AI models (such as GPT-4 or custom LLMs) with enterprise data, APIs, and workflows to create a seamless digital assistant experience.

Key components of Copilot development include:

Requirement analysis: Understanding specific user roles and pain points

Model selection & training: Choosing the right AI model and fine-tuning it with proprietary data

System integration: Connecting the copilot to tools like CRMs, ERPs, emails, analytics dashboards, and more

User interface (UI/UX): Creating intuitive chat-based or voice-based interfaces

Security & governance: Ensuring data privacy, access controls, and compliance
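The components above can be sketched in a few lines. The example below is a deliberately simplified assumption of how a copilot might route a request to a business tool and enforce access controls. The keyword-based `pick_tool` function stands in for a real AI model, and every name in it (`TOOLS`, `tool`, `copilot`, the two sample tools) is hypothetical.

```python
from typing import Callable, Dict, Set

# Registry of business actions the copilot may call (system integration).
TOOLS: Dict[str, Callable[[str], str]] = {}

def tool(name: str):
    """Decorator that registers a function as a named copilot tool."""
    def register(fn: Callable[[str], str]) -> Callable[[str], str]:
        TOOLS[name] = fn
        return fn
    return register

@tool("schedule")
def schedule_meeting(request: str) -> str:
    # A real integration would call a calendar API here.
    return f"Meeting request queued: {request}"

@tool("summarize")
def summarize(request: str) -> str:
    # A real integration would send the text to a language model.
    return "Summary: " + request[:40]

def pick_tool(request: str) -> str:
    """Stand-in for the model: choose a tool with a simple keyword check."""
    text = request.lower()
    if "meeting" in text or "schedule" in text:
        return "schedule"
    return "summarize"

def copilot(request: str, allowed: Set[str]) -> str:
    """Route a request to a tool, enforcing per-user access control."""
    name = pick_tool(request)
    if name not in allowed:
        return f"Not permitted to use tool: {name}"
    return TOOLS[name](request)

reply = copilot("Schedule a meeting with finance", {"schedule"})
```

Separating tool selection from tool execution keeps the permission check in one place, regardless of which model makes the choice.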

How AI Copilots Can Revolutionize Your Business Operations

Here’s how implementing AI Copilots can create tangible improvements across your organization:

1. Boost Productivity and Reduce Repetition

AI Copilots can handle routine tasks—scheduling meetings, summarizing reports, updating records—freeing your employees to focus on high-value work. The result? Less burnout and more innovation.

2. Accelerate Decision-Making

With real-time access to data and contextual recommendations, AI Copilots help employees make informed decisions faster. For example, a finance copilot could highlight trends and flag anomalies in your financial statements instantly.

3. Enhance Customer Experience

Customer service copilots can analyze prior interactions, pull up relevant data, and assist agents in delivering personalized support. Some can even resolve issues autonomously.

4. Unify Disparate Systems

Copilots can act as the connective tissue between siloed systems, allowing users to retrieve data or trigger workflows across multiple platforms without switching interfaces.

5. Enable Continuous Learning and Adaptation

With AI learning from user interactions and outcomes, copilots get smarter over time. This leads to continuously improving performance and relevance.

Use Cases Across Industries

Healthcare: AI Copilots assist clinicians by summarizing patient histories, suggesting treatment options, and automating administrative tasks.

Retail: Merchandising copilots forecast demand, optimize pricing strategies, and automate inventory planning.

Finance: AI assistants help with fraud detection, financial planning, and client advisory services.

Legal: Drafting contracts, summarizing cases, and reviewing documents can be made faster and more accurate with AI copilots.

Getting Started with AI Copilot Development

If you’re considering AI Copilot development for your business, start by:

Identifying critical workflows where automation or assistance would create the most value

Choosing a reliable development partner or platform with expertise in AI and enterprise systems

Starting small, then scaling with more complex tasks and integrations as the solution matures

Final Thoughts

AI Copilots are not just tools—they're strategic assets that can transform how your business operates. From eliminating repetitive work to unlocking new levels of efficiency and insight, investing in AI Copilot development could be the smartest move your organization makes this year.

API Buy Made Simple - Unlock Growth, Save Time, and Build Smarter with API Market

When it comes to building faster and smarter digital solutions, few choices are as impactful as a well-thought-out API Buy. Whether you’re a developer working on a new project or a business looking to expand capabilities, the right API can unlock powerful features without the need for building everything from scratch. At API Market, we’re here to make that decision simple, clear, and worthwhile. We’ve built a platform where developers and businesses can explore, test, and finalize API purchases confidently. Let’s break down why buying APIs is smart, how to do it right, and how API Market simplifies every step.

Why an API Buy Is Smarter Than Building In-House

In today's fast-moving tech world, building every functionality from the ground up can slow you down. With an API Buy, you instantly access ready-to-use functionalities - saving weeks or even months of development time. Instead of spending hours coding common features, you can focus on what makes your product unique.

APIs are not just tools - they’re building blocks that push your development forward. Whether you need payment integration, location tracking, or AI services, an API Buy allows you to get started instantly, without the overhead of building and testing from zero.

What Sets API Market Apart for Your API Buy

At API Market, we believe that finding and buying the right API should be effortless. Here’s what you can expect.

Wide Selection Across Categories

From logistics and payments to AI and communications, we offer APIs that suit a wide range of business needs.

Verified API Listings

Every API on our platform is reviewed and vetted, so your API Buy is secure, dependable, and backed by quality assurance.

Simple Pricing, No Surprises

We believe in clarity. All pricing models are transparent, so there are no hidden fees or confusing terms - just clean deals.

How to Choose the Right API for Your Project

Before you make an API Buy, keep these tips in mind.

Know What You Need

Define the exact functionality you're looking to add. This makes the search quicker and more targeted.

Review the API Documentation

Always go through the documentation. Good documentation means easier integration and smoother implementation.

Use Free Trials

Many APIs offer limited trials. Use them to test compatibility and performance before making a full purchase.
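One simple way to test performance during a trial is to time a handful of calls and look at the median and worst case. The sketch below assumes nothing about any particular API: `measure_latency` is a hypothetical helper, and `fake_api_call` is a placeholder you would replace with a real request to the API under evaluation.

```python
import statistics
import time

def measure_latency(call, runs=5):
    """Time repeated calls and report latency in milliseconds."""
    samples = []
    for _ in range(runs):
        started = time.perf_counter()
        call()
        samples.append((time.perf_counter() - started) * 1000)
    return {
        "runs": runs,
        "median_ms": statistics.median(samples),
        "max_ms": max(samples),
    }

def fake_api_call():
    # Placeholder for a real trial request; sleeps to simulate network delay.
    time.sleep(0.01)

report = measure_latency(fake_api_call, runs=3)
```

Running the same measurement against two candidate APIs gives a like-for-like comparison before committing to a purchase.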

Make Your Next Move with API Market

The future of smart development starts with the right API Buy - and that’s exactly what API Market helps you achieve. Our platform is built to connect you with APIs that power innovation, reduce time to market, and improve your product’s performance.

Visit api.market to browse APIs, explore categories, and experience the easiest way to buy APIs for your next big idea.

The Evolution of Web Development: From Static Pages to Dynamic Ecosystems

Web development has undergone a dramatic transformation since the early days of the internet. What began as simple static HTML pages has evolved into complex, dynamic ecosystems powered by advanced frameworks, APIs, and cloud-based infrastructures. This evolution has not only changed how developers build websites but also how users interact with them. Today, web development is a multidisciplinary field that combines design, programming, and system architecture to create seamless digital experiences.

In the early 1990s, web development was primarily focused on creating static pages using HyperText Markup Language (HTML). These pages were essentially digital documents, interconnected through hyperlinks. However, as the internet grew, so did the demand for more interactive and functional websites. This led to the introduction of Cascading Style Sheets (CSS) and JavaScript, which allowed developers to enhance the visual appeal and interactivity of web pages. CSS enabled the separation of content and design, while JavaScript brought dynamic behavior to the front end, paving the way for modern web applications.

The rise of server-side scripting languages like PHP, Python, and Ruby marked the next significant shift in web development. These languages allowed developers to create dynamic content that could be generated on the fly based on user input or database queries. This era also saw the emergence of Content Management Systems (CMS) such as WordPress and Drupal, which democratized web development by enabling non-technical users to build and manage websites. However, as web applications became more complex, the need for scalable and maintainable code led to the development of frameworks like Django, Ruby on Rails, and Laravel.

The advent of Web 2.0 in the mid-2000s brought about a paradigm shift in web development. Websites were no longer just information repositories; they became platforms for user-generated content, social interaction, and real-time collaboration. This era saw the rise of AJAX (Asynchronous JavaScript and XML), which allowed web pages to update content without requiring a full page reload. This technology laid the groundwork for Single-Page Applications (SPAs), where the entire application runs within a single web page, providing a smoother and more app-like user experience.

Today, web development is dominated by JavaScript frameworks and libraries such as React, Angular, and Vue.js. These tools enable developers to build highly interactive and responsive user interfaces. On the back end, Node.js has revolutionized server-side development by allowing JavaScript to be used both on the client and server sides, creating a unified development environment. Additionally, the rise of RESTful APIs and GraphQL has made it easier to integrate third-party services and build microservices architectures, further enhancing the scalability and flexibility of web applications.

The proliferation of cloud computing has also had a profound impact on web development. Platforms like AWS, Google Cloud, and Microsoft Azure provide developers with scalable infrastructure, serverless computing, and managed databases, reducing the overhead of maintaining physical servers. DevOps practices, combined with Continuous Integration and Continuous Deployment (CI/CD) pipelines, have streamlined the development process, enabling faster and more reliable updates.

Looking ahead, the future of web development is likely to be shaped by emerging technologies such as Progressive Web Apps (PWAs), WebAssembly, and artificial intelligence. PWAs combine the best of web and mobile apps, offering offline capabilities and native-like performance. WebAssembly, on the other hand, allows developers to run high-performance code written in languages like C++ and Rust directly in the browser, opening up new possibilities for web-based applications. Meanwhile, AI-powered tools are beginning to automate aspects of web development, from code generation to user experience optimization.

In conclusion, web development has come a long way from its humble beginnings. It has grown into a sophisticated field that continues to evolve at a rapid pace. As new technologies emerge and user expectations rise, developers must adapt and innovate to create the next generation of web experiences. Whether it’s through the use of cutting-edge frameworks, cloud-based infrastructure, or AI-driven tools, the future of web development promises to be as dynamic and exciting as its past.

https://www.linkedin.com/company/chimeraflow

@ChimeraFlowAssistantBot

DeepSeek vs. OpenAI: The Battle of Open Reasoning Models

New Post has been published on https://thedigitalinsider.com/deepseek-vs-openai-the-battle-of-open-reasoning-models/

Artificial Intelligence (AI) transforms how we solve problems and make decisions. With the introduction of reasoning models, AI systems have progressed beyond merely executing instructions to thinking critically, adapting to new scenarios, and handling complex tasks. These advancements significantly impact industries such as healthcare, finance, and education. From improving diagnostic accuracy to detecting fraud and enhancing personalized learning, reasoning models are becoming essential tools for addressing real-world challenges.

DeepSeek and OpenAI have emerged as two of the leading innovators in the field. DeepSeek has distinguished itself with its modular and transparent AI solutions developed to meet the specific needs of industries that demand precision and accountability. Its focus on adaptability has made it a preferred choice for businesses in healthcare and finance. Meanwhile, OpenAI continues to lead with versatile models like GPT-4, which are widely recognized for their ability to handle various tasks, including text generation, summarization, and coding.

As these two organizations advance AI reasoning capabilities, their competition results in significant progress in the field. Both DeepSeek and OpenAI are playing key roles in developing more innovative and more efficient technologies that have the potential to transform industries and change the way AI is utilized in everyday life.

The Rise of Open Reasoning Models in AI

AI has transformed industries by automating tasks and analyzing data. However, the rise of open reasoning models represents a new and exciting development. These models go beyond simple automation. They think logically, understand context, and solve problems dynamically. Unlike traditional AI systems that rely on pattern recognition, reasoning models analyze relationships and make decisions based on context, making them essential for managing complex challenges.

Reasoning models have already proven effective across industries. In healthcare, they analyze patient data to diagnose illnesses and recommend treatments. In autonomous vehicles, they process real-time sensor data to ensure safety. In finance, they detect fraud and predict trends by examining large datasets. Their flexibility and precision enable them to adapt to diverse needs and deliver reliable solutions.

This shift has increased competition among major AI companies, including DeepSeek, OpenAI, Google DeepMind, and Anthropic. Each brings unique benefits to the AI domain. DeepSeek focuses on modular and explainable AI, making it ideal for healthcare and finance industries where precision and transparency are vital. OpenAI, known for its general-purpose models like GPT-4 and Codex, excels in natural language processing and problem-solving across many applications.

DeepSeek’s model, R1, uses a modular framework, thus enabling businesses to tailor it to specific tasks. It excels in areas requiring deep reasoning, such as medical data analysis and financial pattern detection. OpenAI’s o1 model, based on its GPT architecture, is highly adaptable and performs exceptionally well in natural language processing and text generation.

Pricing also reflects their strategic priorities. DeepSeek offers flexible, cost-effective solutions for businesses of all sizes, while OpenAI provides powerful APIs and documentation, though its premium features may be more expensive for smaller organizations. Both companies are advancing rapidly. DeepSeek focuses on multi-modal reasoning and explainable AI, while OpenAI enhances contextual learning and explores quantum computing integration.

DeepSeek and OpenAI: A Detailed Comparison

Below is a comprehensive comparison of DeepSeek R1 and OpenAI o1, focusing on their features, performance, pricing, applications, and future developments. Both models represent AI advancements but cater to different needs and industries.

Features and Performance

DeepSeek R1: Precision and Efficiency

DeepSeek R1 is an open-source reasoning model for tasks requiring advanced problem-solving, logical inference, and contextual understanding. It builds on the DeepSeek-V3 base model, whose final training run DeepSeek reported at about $5.58 million, a figure often cited as evidence that smaller investments can yield high-performing models.

One of its prominent features is the modular framework that helps businesses customize the model for specific industry needs. This flexibility is enhanced by the availability of distilled versions, such as Qwen and Llama variants, which optimize performance for targeted applications while reducing computational requirements.

DeepSeek R1 relies on a hybrid training approach, combining Reinforcement Learning (RL) with supervised fine-tuning. The RL component enables the model to improve autonomously while fine-tuning ensures accuracy and coherence. This approach has helped DeepSeek R1 achieve substantial results in reasoning-heavy benchmarks:

When benchmarked on AIME 2024, an advanced mathematics test, DeepSeek R1 scored 79.8%, slightly higher than OpenAI o1.

On MATH-500, a high-school-level math problem-solving benchmark, it achieved 97.3%, surpassing OpenAI o1’s 96.4%.

In SWE-bench, which evaluates software engineering tasks, DeepSeek R1 scored 49.2%, compared to OpenAI o1’s 48.9%.

However, in general-purpose benchmarks like GPQA Diamond and multitask language understanding (MMLU), DeepSeek R1 scored 71.5% and 90.8%, respectively, slightly lower than OpenAI o1.

OpenAI o1: Versatility and Scale

OpenAI o1 is a general-purpose model built on GPT architecture. It is designed to excel in natural language processing, coding, summarization, and more. With a broader focus, OpenAI o1 caters to diverse use cases, supported by its robust developer ecosystem and scalable infrastructure.

The model performs excellently in coding tasks, reaching the 96.6th percentile on Codeforces, a popular competitive programming platform that tests algorithmic reasoning. It also leads in general knowledge benchmarks like MMLU, achieving 91.8%, slightly ahead of DeepSeek R1.

While it slightly lags in mathematics and reasoning-specific tasks, OpenAI o1 compensates with its speed and adaptability in NLP applications. For example, it excels in text summarization, question-answering, and creative writing, making it suitable for businesses with varied AI requirements.

Pricing and Accessibility

DeepSeek R1: Affordable and Open

DeepSeek R1’s most significant advantages are its affordability and open-source nature. The model is freely accessible on DeepSeek’s platform, offering up to 50 daily messages at no cost. This accessibility extends to its API pricing, which is 96% cheaper than OpenAI’s rates. The price is $2.19 per million tokens for output, compared to OpenAI’s $60 for the same volume. This pricing model makes DeepSeek R1 especially appealing to startups and small businesses.
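The "96% cheaper" figure follows directly from the two output prices quoted above. The short Python sketch below reproduces the arithmetic; the function names and the 25-million-token monthly workload are assumptions made up for illustration, not figures from either vendor.

```python
def percent_cheaper(price: float, baseline: float) -> float:
    """Return how much cheaper `price` is than `baseline`, as a percentage."""
    return (baseline - price) / baseline * 100


def output_cost(tokens: int, price_per_million: float) -> float:
    """Cost in dollars for generating `tokens` output tokens."""
    return tokens / 1_000_000 * price_per_million


# Output prices per million tokens, as quoted in this article.
DEEPSEEK_R1_PRICE = 2.19
OPENAI_O1_PRICE = 60.00

saving = percent_cheaper(DEEPSEEK_R1_PRICE, OPENAI_O1_PRICE)
print(f"DeepSeek R1 is {saving:.1f}% cheaper on output tokens")

# Hypothetical workload: 25 million output tokens in a month.
monthly_tokens = 25_000_000
print(f"DeepSeek R1: ${output_cost(monthly_tokens, DEEPSEEK_R1_PRICE):,.2f}")
print(f"OpenAI o1:   ${output_cost(monthly_tokens, OPENAI_O1_PRICE):,.2f}")
```

Only output tokens are compared here because that is the only price the article gives; a real estimate would also need input-token prices.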

Moreover, open-source licensing under MIT terms allows developers to customize, modify, and deploy the model without restrictive licensing fees. This makes it an attractive option for enterprises looking to integrate AI capabilities while minimizing costs.

OpenAI o1: Premium Features

OpenAI o1 offers a premium AI experience focusing on reliability and scalability. However, its pricing is significantly higher. The API costs $60 per million tokens for output, and advanced features are only available through subscription plans. While this makes OpenAI a more expensive option, its extensive documentation and developer support justify the cost for larger organizations with complex needs.

Applications

DeepSeek R1 Applications

DeepSeek R1 is ideal for industries that require precision, transparency, and cost-effective AI solutions. Its focus on reasoning-heavy tasks makes it especially useful in scenarios where explainable AI is critical. Potential applications include:

Healthcare: DeepSeek R1 can analyze complex medical data, identify patterns in patient histories, and assist in diagnosing conditions, such as detecting early signs of diseases with high accuracy. These capabilities can be valuable in research hospitals, diagnostic labs, and telemedicine platforms.

Finance: The model’s ability to detect complex patterns makes it suitable for fraud detection and risk assessment. It can assist financial institutions in monitoring transactions and identifying irregularities to reduce financial crime rates.

Education: DeepSeek R1 can power adaptive learning systems by tailoring educational content to individual learners’ progress and needs. This can improve engagement and learning outcomes in online education platforms.

Legal and Compliance: With its modular design, DeepSeek R1 can help in legal contract analysis and compliance monitoring, making it valuable for law firms and regulatory industries.

Scientific Research: Its reasoning capabilities allow it to assist in hypothesis testing and data interpretation, supporting research institutions working on complex problems like genomics or material science.

OpenAI o1 Applications

OpenAI o1, with its general-purpose design, has already demonstrated its utility across a wide range of industries. Its versatility and adaptability make it well-suited for tasks involving natural language processing, creative output, and customer interaction. Common applications include:

Customer Service: OpenAI o1 has been widely deployed in creating chatbots that provide human-like interactions. These chatbots are used by e-commerce platforms, banking institutions, and tech support systems to handle customer inquiries and improve satisfaction.

Content Creation: Businesses frequently use OpenAI o1 to generate high-quality text, including marketing materials, product descriptions, and long-form reports. Its ability to produce coherent and creative content saves marketing teams time and effort.

Coding and Development: With its strong coding assistance capabilities, OpenAI o1 helps developers debug code, generate snippets, and improve software development efficiency.

Creative Industries: OpenAI o1 has been applied to generate storylines, scripts, and even lyrics for creative projects, making it a favourite in the media and entertainment industries.

Future Prospects and Trends

DeepSeek’s Roadmap

DeepSeek is investing in multi-modal reasoning, aiming to integrate visual and text-based reasoning for more comprehensive AI applications. Its emphasis on explainable AI ensures transparency and trust, making it a preferred choice in ethical and regulated industries like healthcare and finance. Additionally, DeepSeek plans to expand its distilled model lineup, offering even more efficient and specialized solutions.

OpenAI’s Vision

OpenAI continues to innovate with plans to enhance contextual learning and integrate its models with emerging technologies, such as quantum computing. CEO Sam Altman recently emphasized the importance of scaling computational resources to achieve breakthroughs in AI. OpenAI is also accelerating its release schedule to stay competitive, with a focus on developing Artificial General Intelligence (AGI). These advancements aim to broaden the applicability of OpenAI’s models while maintaining their reliability and scalability.

Public Perception and Trust Concerns

Regarding AI adoption, trust and public perception matter as much as performance. DeepSeek has drawn some concerns about bias, especially on sensitive or controversial topics. Users have noticed that its responses sometimes avoid strong opinions or critical perspectives, raising questions about how its training data and development environment might influence its outputs. This can be a sticking point for industries or applications where neutrality is critical.

On the other hand, OpenAI has built a solid reputation for being reliable and consistent, but it’s not without its challenges either. As a proprietary platform, OpenAI’s models sometimes feel like a black box, making it harder to understand how decisions are made. This can frustrate users in industries where transparency is non-negotiable, like healthcare or compliance.

Both companies have opportunities to build more trust. DeepSeek’s open-source model has the potential to enhance transparency and collaboration, which could help it address these concerns. Meanwhile, OpenAI’s strong developer ecosystem and track record make it a dependable choice for many. How each company handles these trust issues will be key to how widely they are adopted in the long run.

The Bottom Line

The competition between DeepSeek and OpenAI represents a pivotal moment in AI development, where reasoning models redefine problem-solving and decision-making. DeepSeek emerges with its modular, cost-effective solutions tailored for industries that demand precision, while OpenAI excels in versatility and adaptability with its robust general-purpose models.

Both companies advance their technologies to shape the future of AI, bringing transformative changes across healthcare, finance, education, and more. Their innovations represent the potential of reasoning models and highlight the importance of transparency, trust, and accessibility in adopting AI.



English to Tamil Translation: Accurate translation by Devnagri AI

Looking for the best English to Tamil translation? At Devnagri AI Solution, we understand how much accurate, culturally sensitive translation matters. We have built a team of native speakers and professional translators so that your content is translated flawlessly and fluently. Devnagri AI is a reliable provider of English to Tamil translation solutions.

Why Devnagri AI Solution for English to Tamil Translation?

Here are a few good reasons to choose us for your English to Tamil translation needs.

High-end APIs: Our tools are multilingual and built with a sharp understanding of cultural nuances and regional variations. As a result, our AI translations are not simple word-for-word renderings but contextual translations that preserve meaning.

Quality Control Process: We follow a stringent quality control process. Every translation goes through multiple rounds of editing so that the final text is error-free.

On-Time Delivery: We value your time. Our streamlined project management ensures that every translation project is delivered on schedule.

Confidentiality: We understand the importance of privacy and confidentiality. All your documents are handled with the utmost care and discretion.

Our solutions

We are highly experienced in English to Tamil translation. Our solutions include:

Document Translation: We translate all kinds of documents, including legal documents, medical reports, technical manuals, and academic papers.

Website Translation: Translate your website content into Tamil to tap a larger market. Our DOTA Web makes sure that your website retains its original flavor and intent.

Voice Bot: From marketing material to corporate communication, our multilingual conversational AI bot helps your business reach Tamil-speaking clients and partners.

Devnagri AI can also translate subtitles, scripts, and other media content so that your message reaches Tamil- or Hindi-speaking audiences.

Why Is English to Tamil Translation Important?

Tamil is one of the oldest languages in the world, and it is spoken by millions of people, mostly in Tamil Nadu and Puducherry and in parts of Sri Lanka, Singapore, and Malaysia. Translating your content into Tamil opens up this large audience, which can be highly beneficial for your business and outreach.

Getting started with our English to Tamil translation solutions is easy. Contact us with your requirements and we will give you a customized quote. We will assist you at every step to ensure a smooth, hassle-free translation. You can also contact us if you need Hindi to Tamil translation or vice versa.

Summing Up

Are you ready to bridge the language gap? Call Devnagri AI Solution today for all your English to Tamil or English to Hindi translation needs. Our team is eager to help you communicate effectively and connect with Tamil-speaking audiences. You can request a demo or check our products on the website.

#English to Tamil#Tamil translation#Language Translation#Tamil Language#TranslationServices#English to Tamil translation#LanguageTranslation#Website Translation#Document translation#document translation service#EnglishToTamilTranslation#Document Translation


AI Documentation Assistant | Smart AI Doc Writer

AI Documentation Assistant is an intelligent tool designed to streamline and simplify your documentation process. Whether you're drafting technical manuals, user guides, meeting notes, or internal wikis, our AI-powered assistant helps you write faster, improve clarity, and maintain consistency. Say goodbye to repetitive writing and hello to smart, structured, and error-free documentation—effortlessly.


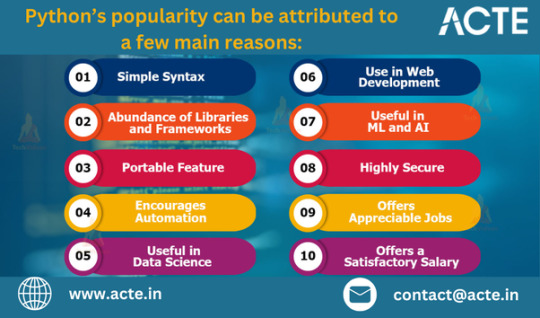

Why Python Reigns Supreme: A Comprehensive Analysis

Python has grown from a user-friendly scripting language into one of the most influential and widely adopted languages in the tech industry. With applications spanning from simple automation scripts to complex machine learning models, Python’s versatility, readability, and power have made it the reigning champion of programming languages. Here’s a comprehensive look at why Python has reached this esteemed position and why it continues to dominate.

With the support of a Learn Python Course in Hyderabad, learning Python becomes much more fun, whatever your level of experience or reason for switching from another programming language.

1. Readable Syntax, Rapid Learning Curve

Python is celebrated for its straightforward, readable syntax, which resembles English and minimizes the use of complex symbols and structures. Unlike languages that can be intimidating to beginners, Python's design philosophy prioritizes readability and simplicity, making it approachable for people new to programming. This ease of understanding is crucial in educational settings, where Python has become a top choice for teaching programming fundamentals.

Python’s gentle learning curve allows beginners to focus on mastering logic and problem-solving rather than wrestling with complicated syntax, making it a universal favorite.

2. A Thriving Ecosystem of Libraries and Tools

Python boasts a rich ecosystem of libraries and tools, which provide ready-made functionalities that allow developers to get straight to work without reinventing the wheel. For instance:

Data Science and Machine Learning: Libraries like pandas, NumPy, scikit-learn, and TensorFlow offer robust tools for data manipulation, statistical analysis, and machine learning.

Web Development: Frameworks like Django and Flask make it simple to build and deploy scalable web applications.

Automation: Tools like Selenium and BeautifulSoup enable developers to automate tasks, scrape websites, and handle repetitive processes efficiently.

Visualization: Libraries such as Matplotlib and Seaborn offer powerful ways to create data visualizations, a crucial feature for data analysts and scientists.

These libraries, created and maintained by a vast community, save developers time and effort, allowing them to focus on innovation rather than the details of implementation.

3. Multi-Paradigm Language with Versatile Use Cases

Python is inherently a multi-paradigm language, which means it supports procedural, object-oriented, and functional programming. This flexibility allows developers to choose the best approach for their projects and adapt their programming style as necessary. Python is ideal for a wide range of applications, including:

Web and App Development: Python’s frameworks, such as Django and Flask, are widely used for building web applications and APIs.

Data Science and Analytics: With its powerful libraries, Python is the language of choice for data analysis, manipulation, and visualization.

Machine Learning and Artificial Intelligence: Python has become the industry standard for machine learning, AI, and deep learning, with major frameworks optimized for Python.

Automation and Scripting: Python excels in automating repetitive tasks, from data scraping to system administration.

This adaptability means that developers can work across projects in different domains, without needing to switch languages, increasing productivity and consistency.
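To make the multi-paradigm point concrete, here is a minimal sketch that computes the same order total in procedural, object-oriented, and functional styles. The order data and all function and class names are made up for illustration.

```python
from dataclasses import dataclass
from functools import reduce

# Each order line is (name, unit price, quantity); sample data only.
orders = [("keyboard", 45.0, 2), ("mouse", 20.0, 1), ("monitor", 150.0, 1)]


# Procedural: an explicit loop that updates a running total.
def total_procedural(items):
    total = 0.0
    for _name, price, qty in items:
        total += price * qty
    return total


# Object-oriented: data and behaviour live together in a class.
@dataclass
class OrderLine:
    name: str
    price: float
    qty: int

    def subtotal(self) -> float:
        return self.price * self.qty


def total_object_oriented(items):
    return sum(OrderLine(*item).subtotal() for item in items)


# Functional: combine map and reduce without mutating any state.
def total_functional(items):
    subtotals = map(lambda item: item[1] * item[2], items)
    return reduce(lambda acc, value: acc + value, subtotals, 0.0)


print(total_procedural(orders), total_object_oriented(orders), total_functional(orders))
```

All three functions return the same total, so the choice between them is a matter of which style fits the surrounding codebase.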

Enrolling in the Best Python Certification Online can help people realise Python's full potential and gain a deeper understanding of its complexities.

4. The Power of the Community

Python’s growth and success are deeply rooted in its active, vibrant community. This worldwide community contributes to Python’s extensive documentation, support forums, and open-source projects, ensuring that resources are available for developers at all levels. Additionally, Python’s open-source nature allows anyone to contribute to its libraries, frameworks, and core features. This constant improvement means Python stays relevant, innovative, and well-supported.

The Python community is also highly collaborative, hosting events, tutorials, and conferences like PyCon, which facilitate learning and networking. With a community of developers continuously advancing the language and sharing their knowledge, Python remains a constantly evolving, resilient language.

5. Dominance in Data Science, Machine Learning, and AI

Python’s popularity surged with the rise of data science, machine learning, and AI. As industries recognized the value of data-driven decision-making, demand for data analytics and predictive modeling grew rapidly. Python, with its well-developed libraries like pandas, scikit-learn, TensorFlow, and Keras, became the preferred language in these fields. These libraries allow developers and researchers to experiment, build, and scale machine learning models with ease, fostering innovation in AI-driven industries.

The popularity of Jupyter Notebooks also propelled Python’s use in data science. This interactive development environment allows users to combine code, visualizations, and explanations in a single document, making data exploration and sharing more efficient. As a result, Python has become a staple in data science courses, research, and industry applications.

6. Cross-Platform Compatibility and Open Source Advantage

Python’s cross-platform compatibility allows it to run on various operating systems like Windows, macOS, and Linux, making it ideal for development in diverse environments. Python code is generally portable, which means that scripts written on one platform can often run on another with little to no modification, providing developers with added flexibility and ease of deployment.
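One place where this portability shows up in practice is file handling. The sketch below, which uses only the standard library, builds paths with pathlib so the same script works whether the operating system uses forward slashes or backslashes. The `write_report` helper and the file names are hypothetical.

```python
import platform
import tempfile
from pathlib import Path


def write_report(base_dir: Path, name: str, text: str) -> Path:
    """Write text to base_dir/reports/name using OS-independent path handling."""
    report_dir = base_dir / "reports"  # "/" joins with the platform's separator
    report_dir.mkdir(parents=True, exist_ok=True)
    report_path = report_dir / name
    report_path.write_text(text, encoding="utf-8")
    return report_path


# A temporary directory keeps the example from touching real files.
with tempfile.TemporaryDirectory() as tmp:
    path = write_report(Path(tmp), "summary.txt", "hello from " + platform.system())
    print(path.name, "->", path.read_text(encoding="utf-8"))
```

Hard-coding separators such as "reports\\summary.txt" is a common reason scripts need changes when they move between platforms, which is why the sketch avoids it.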

As an open-source language, Python is free to use and backed by a vast global community. The lack of licensing costs makes Python an accessible choice for startups, educational institutions, and corporations alike, contributing to its widespread adoption. Python’s open-source nature also allows the language to evolve rapidly, with new features and libraries continuously added by its active user base.

Python’s Future as a Leading Language

Python’s blend of readability, flexibility, and community support has catapulted it to the top of the programming world. Its stronghold in data science, AI, web development, and automation ensures it will remain relevant across a broad spectrum of industries. With its rapid development, Python is poised to tackle emerging challenges, from ethical AI to quantum computing. As more people turn to Python to solve complex problems, it will continue to be a language that’s easy to learn, powerful to use, and versatile enough to stay at the cutting edge of technology.

Whether you’re a newcomer or a seasoned developer, Python is a language that opens doors to endless possibilities in the world of programming.


AI Made Simple with Cogniflow: No Code, Endless Possibilities

Cogniflow is a powerful no-code AI platform designed to bring the capabilities of machine learning to everyone, regardless of their technical skills. With Cogniflow, you can create and deploy custom AI models to automate tasks like text extraction, sentiment analysis, and image recognition—all without writing a single line of code.

Core Functionality: Cogniflow allows users to train and deploy AI models for a variety of data types, including text, images, and audio. It supports over 50 languages and is ideal for automating tasks such as document analysis, speech recognition, and image classification.

Key Features:

No-Code AI Model Creation: Create custom AI models without any coding skills, making machine learning accessible to non-technical users.

Automated Document and Image Analysis: Extract data from documents, recognize objects in images, or identify audio elements, all through easy-to-follow workflows.

API Integrations: Seamlessly connect your AI models with popular tools like Google Sheets, Zapier, and Excel for enhanced automation.

Multi-Use Applications: Use AI models for tasks like resume parsing, customer sentiment analysis, or even leaf disease detection.

Benefits:

Ease of Use: Designed for non-coders, Cogniflow makes creating and deploying AI models simple and intuitive.

Cost Efficiency: No need for expensive data science teams—use Cogniflow to automate and enhance processes without major investments.

Scalable Solutions: Ideal for small businesses, large enterprises, or even individual users looking to improve productivity.

Start building AI models in minutes—no coding required. Visit aiwikiweb.com/product/cogniflow/

#NoCode#AI#Cogniflow#Automation#Productivity#AIForEveryone#MachineLearning#BusinessSolutions#NoCodeAI#TechInnovation


What is PixieBrix? Extend your apps to fit the way you work

PixieBrix is a low-code platform that allows users to customize and automate web applications directly within their browser. It's designed for users who want to enhance their workflow by adding custom modifications, known as "mods," to any website or web app. These mods can range from simple UI tweaks to complex automation workflows that integrate with various third-party services.

Key features of PixieBrix include:

Custom Browser Mods: Users can create custom mods using a combination of pre-made "bricks," which are building blocks for different actions like data extraction, form submissions, and API integrations. These mods can be deployed directly on the websites you already use.

Low-Code Interface: PixieBrix is accessible even to those with minimal coding experience. The platform provides a point-and-click interface to create mods, making it easy to add new functionalities without needing to write extensive code.

Enterprise-Ready: For business users, PixieBrix offers robust features like role-based access control, integration with enterprise SSO providers like Okta and Active Directory, and detailed telemetry for managing deployments at scale.

Integration with Third-Party Tools: PixieBrix supports integration with various third-party applications, enabling users to automate tasks across different tools seamlessly.

Community and Support: PixieBrix has an active community and offers extensive resources, including templates, documentation, and certification courses to help users get the most out of the platform.

PixieBrix is ideal for users looking to enhance their productivity by automating repetitive tasks and customizing their web experience. It's particularly valuable in business environments where efficiency and customization can significantly impact productivity.

For more details or to get started, you can visit their official website at PixieBrix.


Cloud Composer: Apache Airflow Workflow Control Service

Google Cloud Composer

A fully managed workflow orchestration service built on Apache Airflow.

Cloud Composer

Advantages

Fully managed workflow orchestration

Because Cloud Composer is managed and compatible with Apache Airflow, you can concentrate on authoring, scheduling, and monitoring your workflows instead of provisioning resources.

Integrates with other Google Cloud products

End-to-end integration with Google Cloud products, including BigQuery, Dataflow, Dataproc, Datastore, Cloud Storage, Pub/Sub, and AI Platform, lets users fully orchestrate their pipelines.

Supports hybrid and multi-cloud

Whether your pipeline lives entirely on Google Cloud, spans multiple clouds, or runs on-premises, you can author, schedule, and monitor your workflows with a single orchestration tool.

Google Cloud Composer

Hybrid and multi-cloud

Orchestrate workflows that cross between on-premises and the public cloud to ease your cloud migration or to maintain a hybrid data environment. Create workflows that connect data, processing, and services across clouds to get a unified data environment.

Open source

Because it is built on Apache Airflow, Cloud Composer gives users portability and freedom from lock-in. Google contributes back to this open source project, and it integrates with a wide range of platforms, a number that will only grow as the Airflow community grows.

Simple orchestration

Cloud Composer pipelines are configured as directed acyclic graphs (DAGs) using Python, which makes them accessible to any user. One-click deployment gives instant access to a rich library of connectors and multiple graphical representations of your workflow in action, making troubleshooting easy. Your directed acyclic graphs synchronise automatically so that your jobs stay on schedule.

Cloud Composer documentation

About Cloud Composer

Cloud Composer is a fully managed workflow orchestration service that lets you create, schedule, monitor, and manage workflow pipelines that span clouds and on-premises data centres.

Cloud Composer is built on the popular Apache Airflow open source project and operates using the Python programming language.

By using Cloud Composer instead of a local instance of Apache Airflow, you get the benefits of Airflow with no installation or management overhead. Cloud Composer lets you create managed Airflow environments quickly and use Airflow-native tools, such as the powerful Airflow web interface and command-line tools, so you can focus on your workflows rather than your infrastructure.

Version differences for Cloud Composer

Major Cloud Composer versions

The following are the major versions of Cloud Composer:

Cloud Composer 1: You scale the environment manually, and its infrastructure is deployed to your projects and networks.

Cloud Composer 2: The environment’s cluster in this version automatically adjusts to the demands on its resources.

Cloud Composer 3: This version hides infrastructure elements, such as the environment’s cluster and dependencies on other services, and simplifies network configuration.

Workflows, DAGs, and tasks in Airflow

In data analytics, a workflow is a series of tasks for ingesting, transforming, analysing, or using data. In Airflow, workflows are created using DAGs, or “Directed Acyclic Graphs”.

A directed acyclic graph (DAG) is a collection of tasks that you want to schedule and run, organised in a way that reflects their dependencies and relationships. DAGs are created in Python files, which define the DAG structure in code. The purpose of a DAG is to ensure that each task is executed at the right time and in the right order.
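The idea of running tasks in dependency order can be sketched with Python's standard library alone. The example below is not the Airflow API; it uses graphlib.TopologicalSorter (Python 3.9+) to show how a set of dependencies determines a valid execution order. The task names and the `run_order` and `run_pipeline` helpers are made up for illustration.

```python
from graphlib import TopologicalSorter

# Each key is a task; its set lists the tasks that must finish first.
# Task names are hypothetical.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}


def run_order(deps):
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())


def run_pipeline(deps, actions):
    """Run each task's callable in dependency order and return the log."""
    log = []
    for task in run_order(deps):
        log.append(actions[task]())
    return log


# Stand-in task bodies; a real task would ingest data, call an API, and so on.
actions = {name: (lambda n=name: f"ran {n}") for name in dependencies}
print(run_order(dependencies))
print(run_pipeline(dependencies, actions))
```

If the dependencies contained a cycle, TopologicalSorter would raise graphlib.CycleError, which is why the graph has to be acyclic. In Airflow itself, the scheduler also handles timing, retries, and distributing tasks to workers, which this sketch leaves out.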

Each task in a DAG can represent almost anything. For example, a single task might perform any of the following functions:

Preparing data for ingestion

Monitoring an API

Sending an email

Running a pipeline

In addition to running DAGs on a schedule, you can trigger them manually or in response to events, such as changes in a Cloud Storage bucket. Refer to Triggering DAGs for additional details.

Environments for Cloud Composer

Cloud Composer environments are self-contained Airflow deployments based on Google Kubernetes Engine. They work with other Google Cloud services using connectors built into Airflow. You can create one or more environments in a single Google Cloud project, in any supported region.

Cloud Composer provisions the Google Cloud services that run your workflows and all Airflow components. The main components of an environment are:

GKE cluster: Airflow components such as schedulers, triggerers, and workers run as GKE workloads in a single cluster created for your environment, and are responsible for processing and executing DAGs.

The cluster also hosts other Cloud Composer components, such as Composer Agent and Airflow Monitoring, which help manage the Cloud Composer environment, gather logs to store in Cloud Logging, and gather metrics to upload to Cloud Monitoring.

Airflow web server: The web server runs the Apache Airflow UI.

Airflow database: The database holds the Apache Airflow metadata.

Cloud Storage bucket: Cloud Composer associates a Cloud Storage bucket with your environment. This bucket, also called the environment’s bucket, stores the DAGs, logs, custom plugins, and data for the environment.

Interfaces for Cloud Composer

Cloud Composer provides interfaces for managing environments, the Airflow instances that run within environments, and individual DAGs.

For example, you can create and configure Cloud Composer environments using the Google Cloud console, the Google Cloud CLI, the Cloud Composer API, or Terraform.

As another example, you can manage DAGs from the Google Cloud console, from the native Airflow UI, or by running Google Cloud CLI and Airflow CLI commands.

Airflow features in Cloud Composer

With Cloud Composer, you can manage and use Airflow features such as:

Airflow DAGs: Using the native Airflow UI or the Google Cloud console, you can add, update, remove, or trigger Airflow DAGs.

Airflow configuration options: You can change these options from the default values that Cloud Composer uses to custom values. Some configuration options in Cloud Composer are blocked, and you cannot change their values.

Airflow connections.

Airflow UI.

Airflow CLI.

Custom plugins: You can install custom Airflow plugins, such as custom, in-house Apache Airflow operators, hooks, sensors, or interfaces, into your Cloud Composer environment.

Python dependencies: You can install Python dependencies in your environment from the Python Package Index or from a private package repository, including Artifact Registry repositories. If the dependencies are not in the package index, you can also use plugins.

Logging and monitoring for DAGs, Airflow components, and Cloud Composer environments:

You can view Airflow logs associated with individual DAG tasks in the Airflow web interface and in the logs folder in the environment’s bucket.

Cloud Monitoring logs and environment metrics for Cloud Composer environments.

Controlling access in Cloud Composer

You manage security at the Google Cloud project level and can assign IAM roles that allow individual users to create or modify environments. Someone who does not have access to your project, or who lacks an appropriate Cloud Composer IAM role, cannot access any of your environments.

In addition to IAM, you can use Airflow UI access control, which is based on the Apache Airflow Access Control model.

Cloud Composer Costs

With Cloud Composer, you pay only for what you use, measured in vCPU/hour, GB/month, and GB transferred/month. There are multiple pricing units because Cloud Composer uses several Google Cloud products as building blocks.

Pricing is uniform across all levels of consumption and sustained usage. See the pricing page for further details.

Read more on govindhtech.com

#Cloud#ComposerCloud#GoogleCloud#Troubleshooting#CloudStorage#AI#Airflow#news#technews#technology#technologynews#technologytrends#govindhtech


Core PHP vs Laravel – Which to Choose?

Choosing between Core PHP and Laravel for developing web applications is a critical decision that developers and project managers face. This blog explores the unique aspects of each, current trends in 2024, and how new technologies influence the choice between the two. We'll dive into the differences between Core PHP and Laravel in terms of ecosystem, performance, ease of use, community support, and alignment with the latest technological advancements.

Introduction to Core PHP and Laravel

Core PHP refers to PHP in its raw form, without any additional libraries or frameworks. It gives developers full control over the code they write, making it a powerful option for creating web applications from scratch. Laravel, on the other hand, is a PHP framework that provides a structured way of developing applications. It comes with a set of tools and libraries designed to simplify common tasks, such as routing, sessions, caching, and authentication, thereby speeding up the development process for any business looking to hire PHP developers.

Unique Aspects of Core PHP and Laravel

Core PHP:

Flexibility and Control: Offers complete freedom to write custom functions and logic tailored to specific project requirements.

Performance: Without the overhead of a framework, Core PHP can perform faster in scenarios where the codebase is optimized and well-written.

Learning Curve: Learning Core PHP is essential for understanding the fundamentals of web development, making it a valuable skill for developers.

Laravel:

Ecosystem and Tools: Laravel boasts an extensive ecosystem, including Laravel Vapor for serverless deployment, Laravel Nova for administration panels, and Laravel Echo for real-time events.

MVC Architecture: Promotes the use of Model-View-Controller architecture, which helps in organizing code better and makes it more maintainable.

Blade Templating Engine: Laravel’s Blade templating engine simplifies tasks like data formatting and layout management without slowing down application performance.

Trends in 2024

Headless and Microservices Architectures: There's a growing trend towards using headless CMSes and microservices architectures. Laravel is particularly well-suited for this trend due to its ability to act as a backend service communicating through APIs.

Serverless Computing: The rise of serverless computing has made frameworks like Laravel more attractive due to their compatibility with cloud functions and scalability.

AI and Machine Learning Integration: Both Core PHP and Laravel are seeing libraries and tools that facilitate the integration of AI and machine learning functionalities into web applications.

New Technologies Influencing PHP Development

Containerization: Docker and Kubernetes are becoming standard in deployment workflows. Laravel Sail provides a lightweight command-line interface for working with Laravel's default Docker development environment, which makes it easier to run a Laravel application in containers locally before moving to a containerized deployment.

WebSockets for Real-Time Apps: Technologies like Laravel Echo allow developers to easily implement real-time features in their applications, such as live chats and notifications.

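API-First Development: The need for mobile and single-page applications has pushed the adoption of API-first development. Laravel supports this style well through its routing and API resources. The Lumen micro-framework was built for lightweight, fast APIs, although the Laravel team no longer recommends Lumen for new projects.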

Performance and Scalability

Performance and scalability are crucial factors in choosing between Core PHP and Laravel. While Core PHP may offer raw performance benefits, Laravel's ecosystem contains tools and practices, such as caching and queue management, that help in achieving high scalability and performance for larger applications.
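To make the caching point concrete, here is a minimal sketch of the cache-aside pattern with a time-to-live. It is written in Python purely for illustration and is not Laravel's implementation; the `TTLCache` class and its `remember` method are hypothetical names, loosely similar in spirit to a framework helper that returns a cached value or computes and stores it.

```python
import time


class TTLCache:
    """In-memory cache-aside helper: return a fresh cached value or recompute it."""

    def __init__(self, clock=time.monotonic):
        self._store = {}      # key -> (expires_at, value)
        self._clock = clock   # injectable so expiry can be tested without sleeping

    def remember(self, key, ttl_seconds, compute):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]   # cache hit: skip the expensive call
        value = compute()     # cache miss or expired entry: do the work once
        self._store[key] = (now + ttl_seconds, value)
        return value


calls = []


def slow_report():
    """Stand-in for an expensive query; records each real invocation."""
    calls.append(1)
    return {"rows": 3}


cache = TTLCache()
first = cache.remember("report", 60, slow_report)
second = cache.remember("report", 60, slow_report)
print(len(calls))  # the expensive function ran only once
```

The trade-off is the usual one: repeated requests avoid the expensive work, at the cost of serving data that may be up to `ttl_seconds` old.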

Community Support and Resources

Laravel enjoys robust community support, with a wealth of tutorials, forums, and third-party packages available. Core PHP, being the foundation, also has a vast amount of documentation and community forums. The choice might depend on the type of support and resources a developer is comfortable working with.

PHP 8.3 vs Laravel 10

Comparing PHP 8.3 and Laravel 10 shows that each release targets a different layer. PHP 8.3 brings language-level enhancements such as typed class constants and dynamic fetching of class constants and enum members, along with new functions like json_validate() and mb_str_pad(), all aimed at improving the language's robustness and developer experience. The new #[\Override] attribute lets a method declare that it is meant to override a parent method, so mistakes in inheritance are caught early. Laravel 10, for its part, shipped alongside updated versions of official packages including Breeze, Cashier Stripe, Dusk, Horizon, and others, keeping the first-party toolkit compatible with the new release. In short, PHP 8.3 improves the language for every kind of PHP application, while Laravel 10 concentrates on refining the framework and its ecosystem to make web development more efficient and scalable.

Conclusion

The decision between Core PHP and Laravel comes down to the project's specific requirements, the team's expertise, and the desired scalability and performance characteristics. For projects that require rapid development with a structured approach, Laravel stands out with its comprehensive ecosystem and tools. Core PHP remains a strong choice for projects that need custom solutions with minimal overhead.

In 2024, the trends towards serverless computing, microservices, and API-first development are shaping the PHP development landscape. Laravel's alignment with these trends makes it a compelling choice for modern web applications. However, understanding Core PHP remains fundamental for any PHP developer, since it offers the most direct flexibility and control over a web development project.

Embracing new technologies and staying abreast of trends is crucial, whether choosing Core PHP for its directness and speed or Laravel for its rich features and scalability. The ultimate goal is to deliver efficient, maintainable, and scalable web applications that meet the evolving needs of users and businesses alike.


Text

Can Chatbots Write Code? Unveiling the Big Possibilities

As technology continues to advance at an unprecedented pace, the question arises: Can chatbots write code? This intriguing possibility has sparked the curiosity of developers, programmers, and AI enthusiasts alike. Chatbots have come a long way from their early days of simple text-based conversations. With advancements in natural language processing and machine learning, chatbots are now capable of understanding complex queries and providing intelligent responses. But can they take it a step further and actually generate code? Let's explore the potential of chatbots in the world of coding.

The Rise of AI in Programming

Artificial intelligence has made significant strides in various fields, and programming is no exception. AI-powered tools are being developed to assist developers in writing code, debugging, and even generating code snippets. These tools leverage machine learning algorithms to analyze vast amounts of code and learn patterns and best practices. Chatbots, on the other hand, are designed to simulate human-like conversations and provide information or perform tasks based on user input. By combining the capabilities of chatbots with AI-powered programming tools, the possibility of chatbots writing code becomes an intriguing prospect.

Chatbots as Coding Assistants

While chatbots may not be able to write complex applications from scratch, they can certainly assist developers in various coding tasks. Here are some ways chatbots can be used as coding assistants:

1. Syntax and Code Suggestions: Chatbots can analyze code snippets and suggest syntax improvements, point out errors, or propose alternative approaches. By understanding the context and intent of the code, they can offer valuable insights and help developers write cleaner and more efficient code.

2. Documentation and API Assistance: Finding the right documentation or understanding complex APIs can be time-consuming for developers. Chatbots can act as virtual assistants, providing quick access to documentation, explaining usage examples, and answering questions related to APIs. This can significantly speed up the development process and enhance productivity.

3. Code Generation and Automation: While chatbots may not be able to write complex code from scratch, they can generate boilerplate code or automate repetitive tasks. For example, a chatbot can generate code templates for common programming patterns or automate the process of setting up a project structure. This can save developers time and effort, allowing them to focus on more critical aspects of their work.

4. Learning and Knowledge Sharing: Chatbots can also serve as a platform for learning and knowledge sharing within the developer community. They can provide tutorials, answer coding-related questions, and facilitate discussions among developers. This collaborative environment can foster innovation and help developers stay updated with the latest trends and practices.
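The boilerplate generation and project setup mentioned under Code Generation and Automation can be pictured with a small sketch. This is only an assumed illustration of what such an assistant might emit, not how any particular chatbot works; the `scaffold` function, the template text, and the directory layout are all hypothetical.

```python
import tempfile
from pathlib import Path
from string import Template
from typing import List

# Hypothetical template for a module's starting point.
MODULE_TEMPLATE = Template(
    '"""$description"""\n\n\n'
    "def main() -> None:\n"
    '    print("Hello from $name")\n'
)


def scaffold(root: Path, name: str, description: str) -> List[Path]:
    """Create a tiny package skeleton under root and return the files written."""
    package_dir = root / name
    tests_dir = root / "tests"
    package_dir.mkdir(parents=True, exist_ok=True)
    tests_dir.mkdir(parents=True, exist_ok=True)

    files = {
        package_dir / "__init__.py": "",
        package_dir / "main.py": MODULE_TEMPLATE.substitute(
            name=name, description=description
        ),
        tests_dir / "test_main.py": f"from {name}.main import main\n",
    }
    for path, content in files.items():
        path.write_text(content, encoding="utf-8")
    return sorted(files)


# Write into a temporary directory so the example leaves nothing behind.
with tempfile.TemporaryDirectory() as tmp:
    created = scaffold(Path(tmp), "demo_app", "Demo application.")
    relative = [p.relative_to(tmp).as_posix() for p in created]
    main_source = (Path(tmp) / "demo_app" / "main.py").read_text(encoding="utf-8")

print(relative)
```

Generated scaffolding like this is only a starting point. A developer still needs to review it and fill in the actual logic.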

The Limitations and Ethical Considerations

While the idea of chatbots writing code is fascinating, it's important to acknowledge their limitations and consider the ethical implications. Here are some factors to keep in mind:

1. Creativity and Problem-Solving: Coding often requires creativity and problem-solving skills, which are typically associated with human intelligence. While chatbots can assist with routine tasks and provide suggestions, they may struggle with more complex programming challenges that require abstract thinking and innovative solutions.

2. Quality and Reliability: The quality and reliability of chatbot-generated code are crucial considerations. Such code may lack the thoroughness and attention to detail that human developers bring, so it should be carefully reviewed and tested before it is relied on.

3. Ethical Use of AI: As with any AI-powered technology, it's important to consider the ethical implications of chatbots writing code. Developers should ensure that chatbots are used responsibly, respecting intellectual property rights and avoiding plagiarism.

The Future of Chatbots in Coding

While chatbots may not be able to replace human developers entirely, they have the potential to change the coding process significantly. As AI continues to advance, chatbots are likely to become better at understanding complex coding concepts and generating code that meets industry standards. In the future, we can expect chatbots to become valuable tools for developers, providing real-time assistance, automating repetitive tasks, and facilitating collaboration within the coding community.

In conclusion, while chatbots may not write code in the same way human developers do, they can certainly assist and enhance the coding process. By leveraging AI and machine learning, chatbots can provide valuable insights, automate tasks, and foster collaboration among developers. The possibilities of chatbots in coding are just beginning to unfold, and the future looks promising.
